Deepbayes2019 Day 2 Lecture 1 Stochastic Variational Inference And Variational Autoencoders

Slides: the Day 2 PDF by Dmitry Vetrov on stochastic variational inference, in the lectures/day2 folder of the bayesgroup deepbayes 2019 repository on GitHub (lecturer: D. Vetrov).

Richard Zemel, COMS 4995 Lecture 13: Variational Autoencoders. Observation model: consider training a generator network with maximum likelihood, $p(x) = \int p(z)\,p(x \mid z)\,dz$. One problem: if $z$ is low-dimensional and the decoder is deterministic, then $p(x) = 0$ almost everywhere! The model only generates samples over a low-dimensional submanifold of the data space $\mathcal{X}$.
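A small sketch can make the "$p(x) = 0$ almost everywhere" point concrete. It assumes a hypothetical linear decoder that is not from the lecture: a 1-D latent $z \sim \mathcal{N}(0, 1)$ and 2-D observations $x \mid z \sim \mathcal{N}(wz, \sigma^2 I)$, so the decoder mean traces a line. Integrating $z$ out gives $x \sim \mathcal{N}(0, ww^\top + \sigma^2 I)$, which lets the exact $\log p(x)$ check a naive Monte Carlo estimate of the integral. The names `exact_log_px` and `mc_log_px` are illustrative only.

```python
import math
import random

# Hypothetical toy decoder (not from the lecture): z ~ N(0, 1) is 1-D,
# x | z ~ N(w * z, sigma^2 * I) is 2-D, so the decoder mean w * z traces a line.

def exact_log_px(x, w, sigma):
    """Exact log p(x): integrating z out gives x ~ N(0, w w^T + sigma^2 I)."""
    s2 = sigma ** 2
    ww = w[0] ** 2 + w[1] ** 2
    xx = x[0] ** 2 + x[1] ** 2
    wx = w[0] * x[0] + w[1] * x[1]
    log_det = math.log(s2) + math.log(s2 + ww)   # eigenvalues are s2 and s2 + |w|^2
    quad = (xx - wx ** 2 / (s2 + ww)) / s2       # x^T C^{-1} x via Sherman-Morrison
    return -math.log(2 * math.pi) - 0.5 * log_det - 0.5 * quad

def mc_log_px(x, w, sigma, n_samples=20000, seed=0):
    """Naive Monte Carlo estimate of log p(x) = log E_{p(z)}[p(x | z)]."""
    rng = random.Random(seed)
    logs = []
    for _ in range(n_samples):
        z = rng.gauss(0.0, 1.0)
        r2 = (x[0] - w[0] * z) ** 2 + (x[1] - w[1] * z) ** 2
        logs.append(-math.log(2 * math.pi * sigma ** 2) - r2 / (2 * sigma ** 2))
    m = max(logs)  # log-mean-exp for numerical stability
    return m + math.log(sum(math.exp(l - m) for l in logs) / n_samples)

w = (1.0, 1.0)
off_line, on_line = (1.0, 0.0), (1.0, 1.0)
for sigma in (1.0, 0.1, 0.01):
    print(f"sigma={sigma}: log p(off line)={exact_log_px(off_line, w, sigma):.2f}, "
          f"log p(on line)={exact_log_px(on_line, w, sigma):.2f}")
print("MC vs exact at sigma=1:", mc_log_px(off_line, w, 1.0), exact_log_px(off_line, w, 1.0))
```

As $\sigma$ shrinks toward a deterministic decoder, the log density of the point off the line falls toward $-\infty$ while the point on the line gains density; keeping $\sigma > 0$ is what keeps $p(x)$ positive away from the manifold.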

Schematic Representation Of Variational Autoencoders As A Stochastic

Now we can proceed to do stochastic variational inference:

```python
from pyro.infer import SVI, Trace_ELBO
from pyro.optim import Adam

# set up the optimizer
adam_params = {"lr": 0.0005, "betas": (0.90, 0.999)}
optimizer = Adam(adam_params)

# set up the inference algorithm; `model` and `guide` are assumed to be defined earlier
svi = SVI(model, guide, optimizer, loss=Trace_ELBO())

n_steps = 5000
# do gradient steps; `data` is assumed to be the observations that model and guide take
for step in range(n_steps):
    svi.step(data)
```

MLE, MAP, and full Bayesian estimation. In MAP estimation, $\theta$ is treated as a random variable, and we choose the $\theta$ with maximum posterior probability by maximizing the log posterior: $\hat\theta_{\text{MAP}} = \arg\max_\theta \log p(\theta \mid x) = \arg\max_\theta \log \frac{p(x \mid \theta)\,p(\theta)}{p(x)}$. Because the prior enters the estimate alongside the data term $\sum_{i=1}^{n} x_i$, MAP is less likely to overfit than the MLE, which matters most when only a single sample such as $x_1 = 1$ has been seen. Full Bayesian inference, which keeps the entire posterior instead of a point estimate, is the goal of variational inference.

Enter variational inference, the tool that gives variational autoencoders their name. Variational inference is a tool for performing approximate Bayesian inference in very complex models, such as the VAE model. It's not an overly complex tool, but my answer is already too long, so I won't go into a detailed explanation of VI. A twist on ordinary autoencoders, variational autoencoders (VAEs), introduced in 2013, learn a probability distribution over the training samples: the encoder compresses each input into a distribution over latent codes, and the decoder reconstructs the original data from a code.
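The MLE / MAP / full-Bayesian contrast and the SVI loop can be illustrated end to end on a conjugate toy problem where the exact answer is known. This is a plain-Python sketch, not Pyro, and everything in it is an assumption for illustration: the Gaussian prior $\theta \sim \mathcal{N}(\mu_0, \tau^2)$, the Gaussian likelihood $x_i \mid \theta \sim \mathcal{N}(\theta, \sigma^2)$, and the hypothetical helpers `exact_estimates` and `svi_estimate`. `svi_estimate` fits $q(\theta) = \mathcal{N}(m, s^2)$ by stochastic gradient ascent on the ELBO with one reparameterized sample $\theta = m + s\varepsilon$ per step, a hand-derived version of the kind of computation that `SVI` with `Trace_ELBO` automates.

```python
import math
import random

# Hypothetical conjugate toy model (not the lecture's): theta ~ N(prior_mean, prior_std^2),
# x_i | theta ~ N(theta, noise_std^2). The exact posterior is Gaussian, so SVI can be checked.

def exact_estimates(xs, prior_mean, prior_std, noise_std):
    """Return (MLE, MAP, posterior mean, posterior std) for the toy model."""
    n = len(xs)
    mle = sum(xs) / n
    precision = 1.0 / prior_std ** 2 + n / noise_std ** 2  # posterior precision
    post_mean = (prior_mean / prior_std ** 2 + sum(xs) / noise_std ** 2) / precision
    map_est = post_mean  # the mode of a Gaussian posterior equals its mean
    return mle, map_est, post_mean, precision ** -0.5

def svi_estimate(xs, prior_mean, prior_std, noise_std, lr=0.01, n_steps=20000, seed=0):
    """Fit q(theta) = N(m, s^2) by stochastic gradient ascent on the ELBO, using one
    reparameterized sample per step; returns (m, s) averaged over the second half."""
    rng = random.Random(seed)
    m, log_s = 0.0, 0.0
    sum_m = sum_log_s = 0.0
    count = 0
    for step in range(n_steps):
        s = math.exp(log_s)
        eps = rng.gauss(0.0, 1.0)
        theta = m + s * eps  # reparameterization: theta is a function of (m, log_s)
        # gradient of log p(x | theta) + log p(theta) with respect to theta
        dlogp = (sum(xs) - len(xs) * theta) / noise_std ** 2 + (prior_mean - theta) / prior_std ** 2
        m += lr * dlogp                        # d theta / d m = 1
        log_s += lr * (dlogp * eps * s + 1.0)  # d theta / d log_s = eps * s; entropy adds +1
        if step >= n_steps // 2:               # average iterates to damp gradient noise
            sum_m += m
            sum_log_s += log_s
            count += 1
    return sum_m / count, math.exp(sum_log_s / count)

xs = [1.0]  # a single observation x_1 = 1
print(exact_estimates(xs, 0.0, 1.0, 1.0))  # -> (1.0, 0.5, 0.5, 0.7071...)
print(svi_estimate(xs, 0.0, 1.0, 1.0))     # should land near the exact posterior (0.5, 0.707...)
```

With one observation $x_1 = 1$, $\mu_0 = 0$ and $\tau = \sigma = 1$, the MLE is 1 while MAP shrinks to 0.5; SVI recovers (approximately) the whole posterior $\mathcal{N}(0.5, 0.5)$, not just its mode. The learning rate has to stay small relative to the posterior precision for the updates to remain stable.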

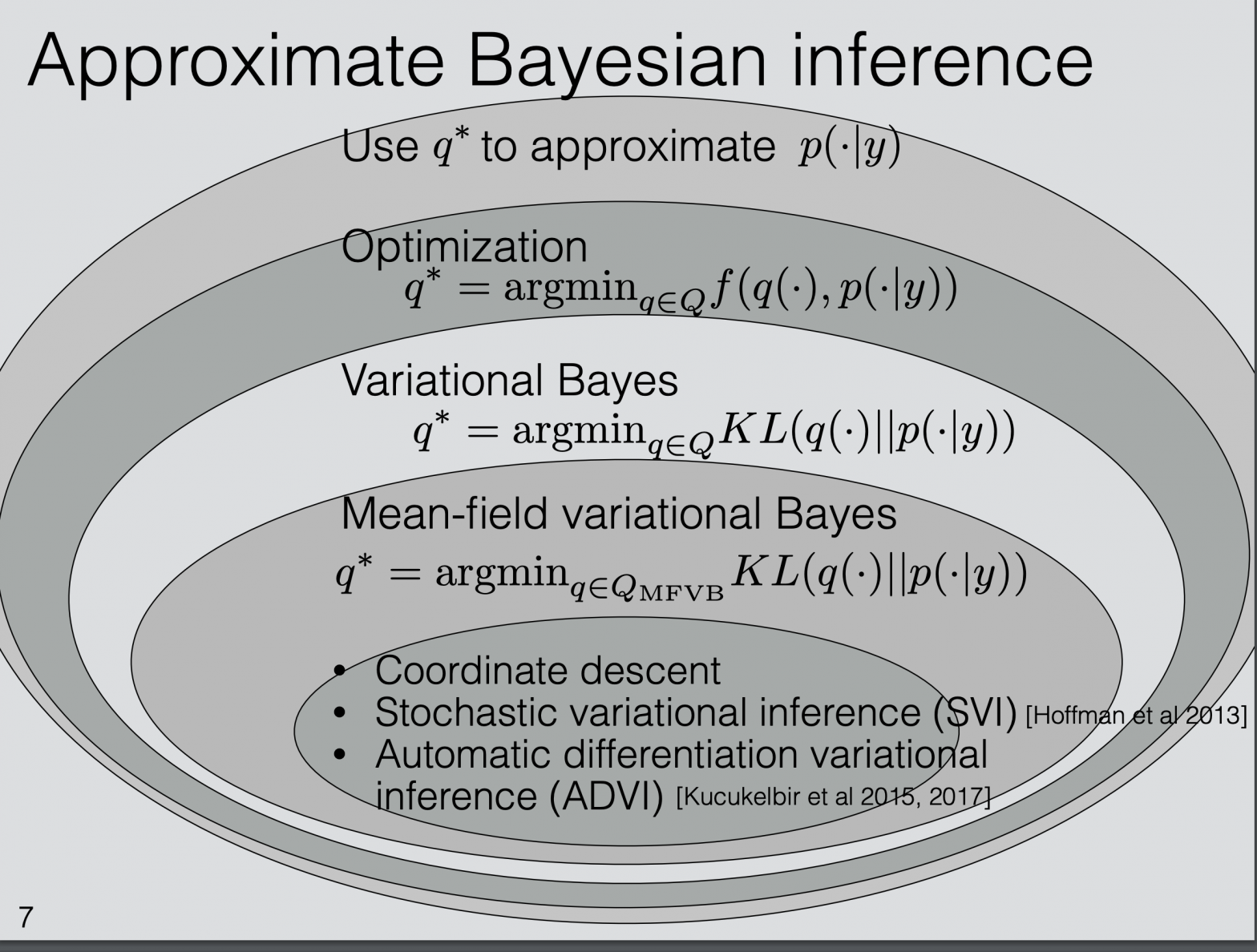

Stochastic Variational Inference – Czxttkl

An Introduction to Variational Autoencoders, by Diederik P. Kingma and Max Welling: "Variational autoencoders provide a principled framework for learning deep latent variable models and corresponding inference models. In this work, we provide an introduction to variational autoencoders and some important extensions."

Two of the best-known algorithms that maximize a variational lower bound on the log-likelihood are the expectation-maximization (EM) algorithm and variational autoencoders. Now, before moving on to variational autoencoders, let's have a brief
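In the VAE of Kingma and Welling, with a diagonal Gaussian encoder $q(z \mid x) = \mathcal{N}(\mu, \operatorname{diag}(\sigma^2))$ and a standard normal prior, the KL term of the ELBO has the closed form $\tfrac12 \sum_j (\mu_j^2 + \sigma_j^2 - 1 - \log \sigma_j^2)$, and latent samples are drawn with the reparameterization trick $z = \mu + \sigma \odot \varepsilon$. This plain-Python sketch shows both pieces and checks the closed form against a Monte Carlo estimate. The helper names and the example values of `mu` and `log_var` are hypothetical; a real VAE would compute these in an autodiff framework with encoder-produced parameters.

```python
import math
import random

def gaussian_kl_to_std_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over dimensions:
    0.5 * sum(mu^2 + sigma^2 - 1 - log sigma^2)."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

def reparameterized_sample(mu, log_var, rng):
    """z = mu + sigma * eps with eps ~ N(0, I): given eps, z is a deterministic,
    differentiable function of (mu, log_var)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def mc_kl(mu, log_var, n_samples=50000, seed=0):
    """Monte Carlo check of the KL: average of log q(z) - log p(z) over samples z ~ q."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        z = reparameterized_sample(mu, log_var, rng)
        log_q = sum(-0.5 * (math.log(2 * math.pi) + lv + (zi - m) ** 2 / math.exp(lv))
                    for zi, m, lv in zip(z, mu, log_var))
        log_p = sum(-0.5 * (math.log(2 * math.pi) + zi * zi) for zi in z)
        total += log_q - log_p
    return total / n_samples

mu, log_var = [0.5, -1.0], [0.0, -1.0]
print(gaussian_kl_to_std_normal(mu, log_var))  # about 0.809
print(mc_kl(mu, log_var))                      # close to the closed form, up to sampling noise
```

Using the closed-form KL instead of a sampled one removes one source of gradient noise; only the reconstruction term $\mathbb{E}_q[\log p(x \mid z)]$ then needs reparameterized samples.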
