Deploying Kafka With the ELK Stack - DZone

Kafka uses ZooKeeper to maintain configuration information and handle synchronization, so we need to install ZooKeeper before setting up Kafka: `sudo apt-get install zookeeperd`. Next, let's...

To ship Kafka server logs into your own ELK deployment, you can use the Filebeat Kafka module. The module collects the data, parses it, and defines the Elasticsearch index pattern in Kibana. To use the module...

Deploying the ELK Stack. Once Helm is installed, visit Artifact Hub, a repository for Kubernetes packages, and search for "elastic". Download the official Elasticsearch package by...

By combining Kafka, the ELK Stack, and Docker, we've created a robust data pipeline capable of handling real-time data streams. This architecture provides scalability, fault tolerance, and ease of deployment, which makes it a good fit for a range of data processing scenarios, whether you're dealing with log files, events, or generating...

We log into the Azure portal and, in the dashboard menu on the left, click "Resource group". In our resource group, we click the "Add" button, choose a name and a region, and then click...

Apache Kafka is the buffer most often used with the ELK Stack. It sits between the log delivery and indexing components and acts as a segregation layer for the data being collected. What remains is how and when to deploy the elements needed for an adaptable log pipeline built on Apache Kafka and the ELK Stack, and how to use all the parts.
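To make the buffering idea concrete, here is a minimal sketch in plain Python. It does not use Kafka at all: an in-memory `deque` stands in for a Kafka topic, and the function name `simulate_buffered_pipeline`, the tick model, and the capacity numbers are illustrative assumptions rather than anything from the original articles. It only shows how a buffer lets shippers keep writing during a burst while the indexer drains at its own pace.

```python
from collections import deque


def simulate_buffered_pipeline(arrivals_per_tick, indexer_capacity):
    """Simulate a log pipeline with a buffer between shippers and the indexer.

    arrivals_per_tick: number of log events shipped in each time step.
    indexer_capacity: maximum events the indexer can process per time step.
    Returns (indexed_per_tick, backlog_per_tick).
    """
    buffer = deque()  # stands in for a Kafka topic (illustrative assumption)
    indexed_per_tick = []
    backlog_per_tick = []
    next_event_id = 0

    for arrivals in arrivals_per_tick:
        # Shippers append to the buffer no matter how busy the indexer is.
        for _ in range(arrivals):
            buffer.append(next_event_id)
            next_event_id += 1

        # The indexer drains at its own pace, never above its capacity.
        processed = min(indexer_capacity, len(buffer))
        for _ in range(processed):
            buffer.popleft()

        indexed_per_tick.append(processed)
        backlog_per_tick.append(len(buffer))

    return indexed_per_tick, backlog_per_tick


if __name__ == "__main__":
    # A burst of 10 events arrives in the second tick; the indexer handles 3 per tick.
    indexed, backlog = simulate_buffered_pipeline([2, 10, 1, 0, 0], indexer_capacity=3)
    print("indexed per tick:", indexed)
    print("backlog per tick:", backlog)
```

In this toy run the burst is held in the buffer and worked off over the following ticks, so no event is dropped and the indexer never exceeds its capacity. A real Kafka deployment adds durability, partitioning, and consumer groups, none of which this sketch models.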

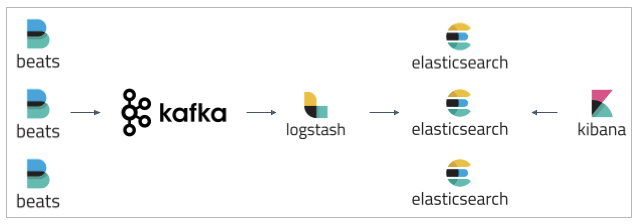

Running Kafka as a buffer in front of Logstash makes an ELK deployment more resilient and helps absorb log overload. Apache Kafka is the most common buffer solution deployed together with the ELK Stack. It is deployed between the log delivery and indexing units and acts as a segregation unit for the data being collected.

We touched on the importance of Logstash when comparing it with Filebeat in the previous article. To install Logstash, we will add three components: a pipeline config (logstash.conf), a settings config (logstash.yml), and a Docker Compose file. The pipeline configuration includes information about your input (Kafka in our case), any filtering that...
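As a rough illustration of what a pipeline config with a Kafka input might look like, the Python sketch below renders the text of a minimal logstash.conf. The helper name `render_logstash_pipeline` and every value passed to it (broker address, topic name, Elasticsearch host, index pattern) are hypothetical placeholders, not values from the original article. The option names (`bootstrap_servers`, `topics`, `hosts`, `index`) are those of the Logstash Kafka input and Elasticsearch output plugins. The filter section is left out because the source does not describe it.

```python
def render_logstash_pipeline(bootstrap_servers, topic, es_hosts, index):
    """Return the text of a minimal logstash.conf: Kafka input, Elasticsearch output."""
    # Quote each Elasticsearch host for the Logstash array syntax.
    hosts = ", ".join(f'"{h}"' for h in es_hosts)
    return (
        "input {\n"
        "  kafka {\n"
        f'    bootstrap_servers => "{bootstrap_servers}"\n'
        f'    topics => ["{topic}"]\n'
        "  }\n"
        "}\n"
        "\n"
        "output {\n"
        "  elasticsearch {\n"
        f"    hosts => [{hosts}]\n"
        f'    index => "{index}"\n'
        "  }\n"
        "}\n"
    )


if __name__ == "__main__":
    # All values below are placeholders chosen for illustration only.
    print(
        render_logstash_pipeline(
            bootstrap_servers="kafka:9092",
            topic="app-logs",
            es_hosts=["http://elasticsearch:9200"],
            index="app-logs-%{+YYYY.MM.dd}",
        )
    )
```

Generating the file from a function is only a convenience for this example; in practice logstash.conf is usually written by hand and mounted into the Logstash container through the Docker Compose file.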
