Llama 8b Tested A Huge Step Backwards 📉

Full test of Llama 3.1 8B. Even though it had a huge bump in benchmarks, the results from my test were very disappointing. Vultr is empowering the next generation… 🆕 From Matthew Berman! Discover the significant quality leap in Llama 3.1 8B testing and streamline AI model deployment with Vultr's support. #ai #qualityimprovement

Key takeaways at a glance:

1. 00:16 Llama 3.1 8B shows a significant quality improvement over the previous version.
2. 00:29 Local model testing with…

Foundation: Llama 3.1 8B is built on the transformer architecture, which has become the standard for natural language processing (NLP) tasks. Transformers handle sequences of data such as text very effectively because they can capture long-range dependencies between words. Self-attention mechanism: the model uses a self-attention…

The Llama 3.1 8B model shows a significant quality improvement on benchmarks, most notably doubling its HumanEval score.

Step 1: education-value-based curation. They used Llama 3.1 8B Instruct to assign an education value (on a scale of 1 to 5) to every example (about 2.8M in total), then kept only the samples with a score greater than 3. This follows the approach of the FineWeb-Edu dataset. The step reduced the total number of examples from 2.8M to 1.3M.

In the video, the presenter tests the 8-billion-parameter Llama 3.1 model. He highlights its speed but ultimately expresses disappointment with its performance on coding challenges, logic problems, and moral dilemmas. Despite its potential and the benefits of using Vultr's cloud services, the model fails to deliver consistently accurate results, leaving the presenter and the audience…
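To make the self-attention mechanism mentioned above more concrete, here is a minimal single-head scaled dot-product attention sketch in plain Python, computing softmax(Q K^T / sqrt(d)) V. It is only an illustration of the general technique and does not reproduce Llama 3.1's actual implementation. The real model adds learned projections, multiple heads, positional encoding and more. The helper names (`matmul`, `softmax`, `self_attention`) and the toy inputs are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max for numerical stability; -inf entries get weight 0.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(q: Matrix, k: Matrix, v: Matrix, causal: bool = True) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    With causal=True, position i may only attend to positions 0..i,
    as in decoder-only language models.
    """
    d = len(q[0])
    scores = matmul(q, transpose(k))
    for i, row in enumerate(scores):
        for j in range(len(row)):
            row[j] = row[j] / math.sqrt(d)
            if causal and j > i:
                row[j] = float("-inf")  # mask out future positions
    weights = [softmax(row) for row in scores]
    return matmul(weights, v)


if __name__ == "__main__":
    # Toy "token embeddings" reused as Q, K and V (no learned projections).
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in self_attention(x, x, x):
        print([round(val, 3) for val in row])
```

Because of the causal mask, the first position can only attend to itself, so its output equals its own value vector. Later positions mix in earlier tokens in proportion to their attention weights.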

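The curation step described above amounts to "score every example, then keep those above a threshold." The sketch below shows only that filtering logic, under stated assumptions. `score_education_value` is a hypothetical stand-in for prompting Llama 3.1 8B Instruct to return a 1-to-5 rating, because the source gives neither the prompt nor the parsing. Its toy keyword heuristic exists only so the example runs.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Example:
    text: str


def score_education_value(example: Example) -> int:
    """HYPOTHETICAL stand-in for an LLM judge returning an integer in 1..5.

    In the described pipeline this would be Llama 3.1 8B Instruct; here a toy
    keyword count is used purely so the sketch is runnable.
    """
    keywords = ("explain", "because", "example", "proof", "step")
    hits = sum(word in example.text.lower() for word in keywords)
    return max(1, min(5, 1 + hits))


def curate(examples: Iterable[Example],
           scorer: Callable[[Example], int] = score_education_value,
           threshold: int = 3) -> List[Example]:
    """Keep only examples whose score is strictly greater than `threshold`."""
    return [ex for ex in examples if scorer(ex) > threshold]


if __name__ == "__main__":
    data = [Example("lol"),
            Example("Explain step by step, because an example helps: proof below")]
    print([ex.text for ex in curate(data)])
    # Retention implied by the figures in the text (about 2.8M in, 1.3M out).
    print(f"{1.3e6 / 2.8e6:.1%}")
```

Passing a different `scorer` lets the same filter wrap a real model call. With the numbers quoted above, roughly 46% of the examples survive the filter.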
The 8B-parameter version of Llama 3 is really impressive for its size. It knocks all the measured benchmarks out of the park, indicating a big step up in ability for open source at…

If you're confined to this size, the 8B or its derivatives are advisable. However, as is generally the case, larger models tend to be more effective, and I would prefer to run even a small quantization (just not 1-bit) of the 70B over the unquantized 8B. turboderp Llama 3 8B Instruct EXL2, 6.0bpw, 8K context, Llama 3 Instruct format: …
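The preference above (a small quantization of the 70B over the unquantized 8B) largely comes down to memory. As a rough sketch, weight memory in bytes is parameters × bits per weight ÷ 8. The helper below makes several assumptions. It uses nominal parameter counts of 8 and 70 billion, treats "unquantized" as 16 bits per weight, and uses 2.5bpw purely as a hypothetical "small quantization" of the 70B. It also ignores the KV cache for the context window, activations, and runtime overhead, so real requirements are higher.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (10**9 bytes).

    bytes = params * bits_per_weight / 8; KV cache and overhead are excluded.
    """
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    configs = [
        ("8B, 16-bit", 8, 16.0),             # assumed "unquantized" baseline
        ("8B, EXL2 6.0bpw", 8, 6.0),         # bit width quoted in the text
        ("70B, 2.5bpw (hypothetical)", 70, 2.5),
    ]
    for name, params, bpw in configs:
        print(f"{name:<30} ~{weight_memory_gb(params, bpw):.1f} GB")
```

Under these assumptions, the unquantized 8B needs about 16 GB for its weights and the 6.0bpw build about 6 GB. A 70B at 2.5bpw needs about 22 GB, which is why running the larger model usually means accepting a much lower bit width.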

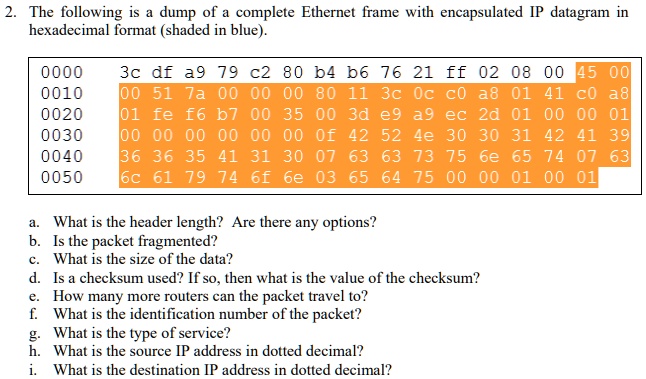

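The "Llama 3 Instruct format" mentioned above refers to Meta's chat template for the instruct models. Each turn opens with a role header and closes with `<|eot_id|>`, and the prompt ends with an open assistant header. Below is a minimal sketch of building that string by hand. The function `build_llama3_prompt` is hypothetical. In practice, a tokenizer's bundled chat template (for example Hugging Face `apply_chat_template`) is the safer way to produce it.

```python
from typing import List, Tuple

BOT = "<|begin_of_text|>"
EOT = "<|eot_id|>"


def header(role: str) -> str:
    """Role header followed by the blank line that precedes message content."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def build_llama3_prompt(messages: List[Tuple[str, str]]) -> str:
    """Render (role, content) pairs in the Llama 3 Instruct layout.

    Content is whitespace-trimmed, and the result ends with an open assistant
    header so the model generates the next reply.
    """
    parts = [BOT]
    for role, content in messages:
        if role not in ("system", "user", "assistant"):
            raise ValueError(f"unexpected role: {role}")
        parts.append(header(role) + content.strip() + EOT)
    parts.append(header("assistant"))
    return "".join(parts)


if __name__ == "__main__":
    print(build_llama3_prompt([("system", "Be brief."), ("user", "Hi")]))
```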
