SHAP Values for Beginners: What They Mean and Their Applications

SHAP is a widely used Python package for understanding and debugging machine learning models.

Q: Can SHAP values be used for both regression and classification problems?
A: Yes. In both cases they provide interpretable insights into the impact of features on predictions.

Q: How can SHAP values help in debugging machine learning models?
A: They can help identify the features that caused incorrect predictions.
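The debugging idea can be shown with a minimal sketch. It assumes a hypothetical logistic-regression classifier with hand-picked weights, invented feature names and data, and independent features. Under those assumptions the SHAP value of feature i on the model's margin (log-odds) has the closed form w_i * (x_i - mean(x_i)), so it can be computed exactly and used to find the feature that pushed a wrong prediction the furthest.

```python
import math

# Hypothetical logistic-regression classifier: margin = BIAS + sum(w_i * x_i).
# Feature names, weights, bias and data are illustrative assumptions.
FEATURES = ["income", "debt_ratio", "age"]
WEIGHTS = [0.8, -2.0, 0.1]
BIAS = -0.5

def margin(x):
    """Log-odds output of the model for one observation."""
    return BIAS + sum(w * xi for w, xi in zip(WEIGHTS, x))

def predict_proba(x):
    """Probability of the positive class."""
    return 1.0 / (1.0 + math.exp(-margin(x)))

def linear_shap(x, background):
    """Exact SHAP values of the margin for a linear model, assuming
    independent features: phi_i = w_i * (x_i - background mean of i)."""
    n = len(background)
    means = [sum(row[i] for row in background) / n for i in range(len(x))]
    return [w * (xi - m) for w, xi, m in zip(WEIGHTS, x, means)]

background = [
    [1.0, 0.2, 3.0],
    [2.0, 0.4, 4.0],
    [1.5, 0.3, 5.0],
    [0.5, 0.1, 2.0],
]
# Hypothetical case: truly positive, but the model predicts negative.
x = [1.0, 1.5, 3.5]
phi = linear_shap(x, background)
base = sum(margin(row) for row in background) / len(background)

print(f"P(positive) = {predict_proba(x):.3f}")
# Feature that pushed the margin most strongly towards the negative class.
worst = min(range(len(phi)), key=lambda i: phi[i])
print(f"Most negative contribution: {FEATURES[worst]} ({phi[worst]:+.3f})")
```

Because SHAP values are additive on the log-odds scale, `base + sum(phi)` reproduces `margin(x)`. The sigmoid that turns the margin into a probability is nonlinear, which is why per-feature contributions for classifiers are often read on the margin scale.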

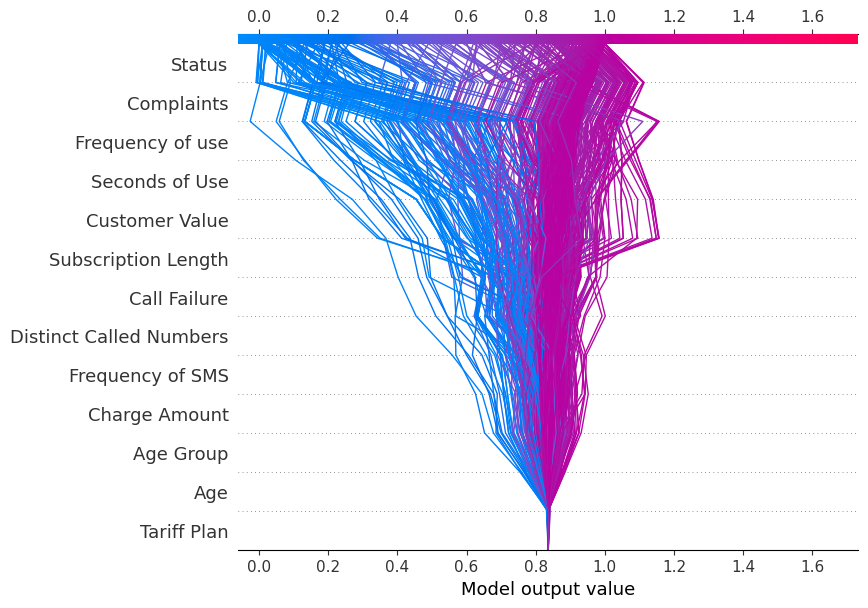

Introduction to SHAP Values and Their Application in Machine Learning

SHAP values are a common way of getting a consistent and objective explanation of how each feature impacts a model's prediction. They are based on game theory and assign an importance value to each feature in a model: features with positive SHAP values push the prediction up, while features with negative SHAP values push it down.

SHAP is based on Shapley values, a concept borrowed from cooperative game theory. Shapley values allocate a contribution to each player in a game, reflecting that player's individual impact on the outcome. In machine learning, the "players" are the features and the "game" is the model's prediction. SHAP adapts this concept by treating each feature value as a player: the SHAP value of a feature measures how much that feature's value contributes to the difference between the model's prediction and the average model output.

In the waterfall plot above (image by author), the x-axis shows values of the target (dependent) variable, the house price. x is the chosen observation, f(x) is the model's prediction for input x, and E[f(X)] is the expected value of the model output, in other words the mean of all predictions (mean(model.predict(X))).
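To make the definition concrete, here is a minimal sketch that computes exact Shapley values by brute force for a hypothetical three-feature house-price model. It assumes the common "interventional" value function: a coalition's value is the average prediction when the coalition's features are fixed to the observation x and the remaining features come from a background dataset. The model, feature names and data are invented for illustration. Exact enumeration needs O(2^n) coalitions, which is why SHAP implementations rely on approximations or model-specific algorithms such as TreeSHAP.

```python
from itertools import combinations
from math import factorial

FEATURES = ["rooms", "area_m2", "age_years"]

def model(row):
    """Hypothetical house-price model (in thousands) with one interaction."""
    rooms, area, age = row
    return 50 + 20 * rooms + 1.5 * area - 0.8 * age + 0.05 * rooms * area

def value(coalition, x, background):
    """v(S): mean prediction when features in S take x's values and the
    other features take each background row's values."""
    total = 0.0
    for z in background:
        mixed = [x[i] if i in coalition else z[i] for i in range(len(x))]
        total += model(mixed)
    return total / len(background)

def shapley_values(x, background):
    """Exact Shapley values by enumerating every coalition."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight |S|! (n - |S| - 1)! / n! for coalitions S without i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                gain = value(s | {i}, x, background) - value(s, x, background)
                phi[i] += weight * gain
    return phi

background = [[3, 80, 30], [4, 120, 10], [2, 60, 50], [5, 150, 5]]
x = [4, 100, 20]

base_value = value(set(), x, background)  # E[f(X)]: mean prediction
phi = shapley_values(x, background)
prediction = model(x)                     # f(x)

# Waterfall-style listing, largest absolute contribution first.
print(f"E[f(X)] = {base_value:.2f}")
for j in sorted(range(len(phi)), key=lambda j: abs(phi[j]), reverse=True):
    print(f"  {FEATURES[j]:>10}: {phi[j]:+.2f}")
print(f"f(x)    = {prediction:.2f}")
```

The printed values mirror a waterfall plot. Starting from E[f(X)], each feature's SHAP value moves the output until it lands exactly on f(x).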

An Introduction to SHAP Values and Machine Learning Interpretability

Like Shapley values, SHAP values have been proven to be the unique values that satisfy the four properties guaranteeing a fair payout: efficiency, symmetry, dummy and additivity (SHAP values are a kind of Shapley values).

Several gradient-boosting libraries can also compute SHAP values natively. LightGBM returns them from its `predict` method (with `pred_contrib=True`). In CatBoost, they are obtained by calling the `get_feature_importance` method on the model with `type` set to `ShapValues`. After extracting the core booster model of XGBoost, it took only about a second to calculate Shapley values for 45k samples; the dimensions of the resulting array can be checked with `shap_values_xgb.shape`.
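The four fair-payout properties mentioned above can be checked numerically. The sketch below runs a brute-force Shapley computation on two small hypothetical games (players and payoffs are invented; players stand in for features and the payoff for a model's output) and asserts efficiency, symmetry, dummy and additivity.

```python
from itertools import combinations
from math import factorial, isclose

def shapley(players, v):
    """Exact Shapley values of a cooperative game v (frozenset -> payoff)."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            # Weight |S|! (n - |S| - 1)! / n! for coalitions S without p.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (v(s | {p}) - v(s))
        phi[p] = total
    return phi

PLAYERS = ["a", "b", "c", "d"]

def game_1(s):
    # Hypothetical payoff: "a" and "b" are interchangeable, "d" never matters.
    return 10 * ("a" in s) + 10 * ("b" in s) + 5 * ({"a", "b"} <= s) + 3 * ("c" in s)

def game_2(s):
    # Second hypothetical payoff, used only to test additivity.
    return len(s) + 4 * ({"c", "d"} <= s)

phi_1 = shapley(PLAYERS, game_1)
phi_2 = shapley(PLAYERS, game_2)
phi_sum = shapley(PLAYERS, lambda s: game_1(s) + game_2(s))
full, empty = frozenset(PLAYERS), frozenset()

# Efficiency: the payouts add up to the total gain of the grand coalition.
assert isclose(sum(phi_1.values()), game_1(full) - game_1(empty))
# Symmetry: interchangeable players receive the same payout.
assert isclose(phi_1["a"], phi_1["b"])
# Dummy: a player that never changes the payoff receives zero.
assert phi_1["d"] == 0.0
# Additivity: the values of a sum of games are the sums of the values.
assert all(isclose(phi_sum[p], phi_1[p] + phi_2[p]) for p in PLAYERS)
print(phi_1)
```

In the SHAP setting, efficiency is what makes a waterfall plot close: the feature contributions add up to the gap between f(x) and E[f(X)].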

