Converting a Python List to a Spark DataFrame (PySpark Tutorial)

… `StructField('lastname', StringType(), True) ])`, followed by `deptdf = spark.createDataFrame(data=dept, schema=deptschema)`, `deptdf.printSchema()`, and `deptdf.show(truncate=False)`. This yields the same output as above. You can also create a DataFrame from a list of `Row` objects, which requires `from pyspark.sql import Row`.

In Spark, the `SparkContext.parallelize` function can be used to convert a Python list to an RDD, and the RDD can then be converted to a DataFrame. The following sample code is based on Spark 2.x. This page shows how to convert the following list to a DataFrame: `data = [('category a', 100, "this is category a"), ('category b', 120. …`

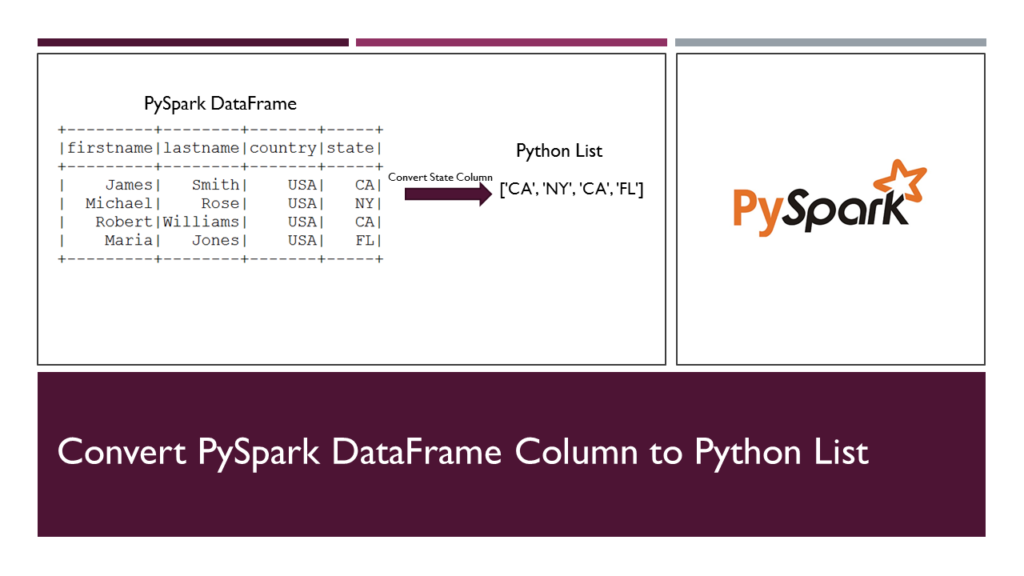

How to Convert a PySpark Column to a List (Spark By Examples)

You can use the following methods to create a DataFrame from a list in PySpark. Method 1 creates a DataFrame from a flat list: import the type with `from pyspark.sql.types import IntegerType`, define the data as `data = [10, 15, 22, 27, 28, 40]`, and create a single-column DataFrame with `df = spark.createDataFrame(data, IntegerType())`. Method 2 creates a DataFrame from a list of lists.

Spark: 2.4.0, Python: 3.6. Related tasks: converting a PySpark DataFrame into a list of Python dictionaries, and converting a list of dictionaries into a PySpark DataFrame.

PySpark tutorial: PySpark is the Python API for Apache Spark, an open-source framework designed to simplify and accelerate large-scale data processing and analytics. It offers a high-level API for Python, which allows integration with the existing Python ecosystem. PySpark revolutionizes traditional…

The `createDataFrame` method is used to create a DataFrame. The `data` argument is the list of rows and the `columns` argument is the list of column names: `dataframe = spark.createDataFrame(data, columns)`. Example 1 is Python code that creates a PySpark student DataFrame from two lists; example 2 creates a DataFrame from four lists.

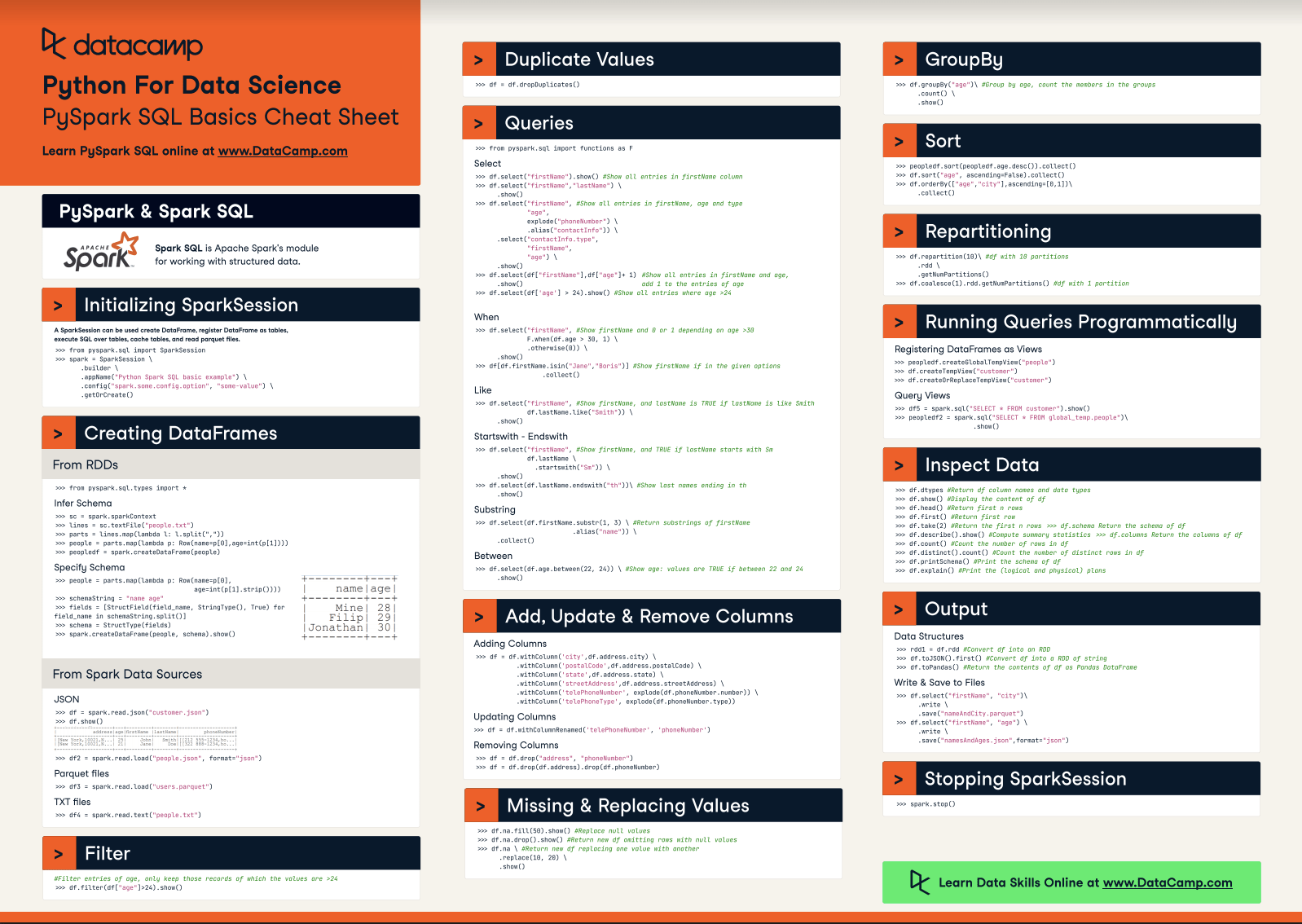

PySpark Cheat Sheet: Spark DataFrames in Python, by Karlijn Willems

PySpark is an interface for Apache Spark in Python. With PySpark, you can write Python and SQL-like commands to manipulate and analyze data in a distributed processing environment. To learn the basics of the language, you can take DataCamp's Introduction to PySpark course. This is a beginner program that will take you through manipulating…

PySpark `collect_list()` and `collect_set()` functions: the PySpark SQL `collect_list()` and `collect_set()` functions create an array (`ArrayType`) column on a DataFrame by merging rows, typically after a group by or over window partitions. `collect_list()` keeps duplicate values, while `collect_set()` removes them. The article explains how to use these two functions and how they differ, with examples.

